Current Research

Open-Vocabulary Gaussian Splatting

Fixed-class 3D perception limits scene understanding. We combine large language models, generative AI, and open-vocabulary Gaussian Splatting to enable free-form queries over 3D reconstructions. This approach supports flexible, multi-view reasoning for robotics, AR/VR, and intelligent environment monitoring.

Recently Completed

LUNA: Low-Light Geometry-Aware Panoptic Lifting

Low-light noise and blur break the assumptions of panoptic reconstruction methods. We extend Panoptic Lifting with depth cues and adaptive multitask optimization to improve stability under degraded inputs. On a degraded version of the Replica dataset, LUNA outperforms baselines in both segmentation and reconstruction at every tested noise and blur level. For more details: Project Page
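The text does not say which adaptive multitask scheme LUNA uses. The sketch below is an illustration only. It assumes homoscedastic-uncertainty weighting in the style of Kendall et al., a common way to balance several losses automatically. Each task i gets a learnable log-variance s_i, and the combined loss is sum_i exp(-s_i) * L_i + s_i. The function names and loss values are hypothetical. The task losses are held fixed so that the learned weights can be checked against their closed-form optimum 1/L_i.

```python
import math


def total_loss(task_losses, log_vars):
    """Uncertainty-weighted multitask loss: sum_i exp(-s_i) * L_i + s_i (assumed scheme)."""
    return sum(math.exp(-s) * l + s for l, s in zip(task_losses, log_vars))


def fit_log_vars(task_losses, log_vars, lr=0.1, steps=500):
    """Gradient descent on the log-variances s_i, holding the task losses fixed.

    d/ds_i [exp(-s_i) * L_i + s_i] = 1 - exp(-s_i) * L_i, which is zero at s_i = log(L_i).
    """
    s = list(log_vars)
    for _ in range(steps):
        s = [si - lr * (1.0 - math.exp(-si) * li) for si, li in zip(s, task_losses)]
    return s


# Hypothetical per-task losses (e.g. semantic, instance, depth); illustrative values only.
losses = [2.0, 0.5, 0.1]
log_vars = fit_log_vars(losses, [0.0, 0.0, 0.0])
weights = [math.exp(-s) for s in log_vars]
print([round(w, 3) for w in weights])  # → [0.5, 2.0, 10.0]
```

Tasks with a large loss end up with a small weight, so one noisy objective cannot dominate the update. An example would be segmentation on heavily blurred frames. In a real model, the s_i would be optimized jointly with the network parameters.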

Affordance Grounding with Language Embedded Radiance Fields

Grounding object affordances in 3D scenes typically requires heavy supervision. In this research, we pair a compact language model with LERF. The language model parses free-form queries into CLIP-aligned affordance representations, which are then used to generate reconstructions. Our approach achieves high overall query accuracy, enabling intuitive, high-performance 3D interaction. For more details: Project Page
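LERF scores how well a rendered language embedding matches a text query by comparing it against a set of canonical phrases. The sketch below implements that relevancy score in plain Python. It uses toy 3-D vectors in place of real CLIP embeddings. The compact language model that turns free-form queries into affordance phrases is not reproduced, and the example phrases in the comments are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit length (CLIP embeddings are compared after L2 normalization)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def relevancy(phi_lang, phi_query, canonical):
    """LERF-style relevancy score for one rendered language embedding.

    For each canonical phrase embedding, take the pairwise softmax
    exp(phi_lang . phi_query) / (exp(phi_lang . phi_canon) + exp(phi_lang . phi_query)),
    then keep the minimum (the worst case against any canonical phrase).
    """
    q = math.exp(dot(phi_lang, phi_query))
    return min(q / (math.exp(dot(phi_lang, c)) + q) for c in canonical)


# Toy 3-D stand-ins for CLIP embeddings (real CLIP embeddings have hundreds of dimensions).
query = normalize([1.0, 0.0, 0.0])         # hypothetical parsed affordance phrase, e.g. "graspable handle"
canonical = [normalize([0.0, 1.0, 0.0]),   # e.g. "object"
             normalize([0.0, 0.0, 1.0])]   # e.g. "stuff"
on_target = normalize([0.9, 0.1, 0.1])     # rendered embedding at a pixel on the handle
off_target = normalize([0.1, 0.9, 0.1])    # rendered embedding elsewhere in the scene

print(round(relevancy(on_target, query, canonical), 3))
print(round(relevancy(off_target, query, canonical), 3))
```

A score above 0.5 means the embedding is closer to the query than to every canonical phrase. This is how a relevancy map separates the queried affordance from the rest of the scene.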

Tree-Sight: Transforming tree phenotyping for future forests

Real-world 3D LiDAR segmentation for forest analysis is limited by the scarcity of annotated datasets. We address this by generating high-fidelity synthetic LiDAR scans of forest trees and applying unsupervised domain adaptation to train part-segmentation models. Our approach achieves performance competitive with models trained on real data while reducing the need for costly manual annotation.

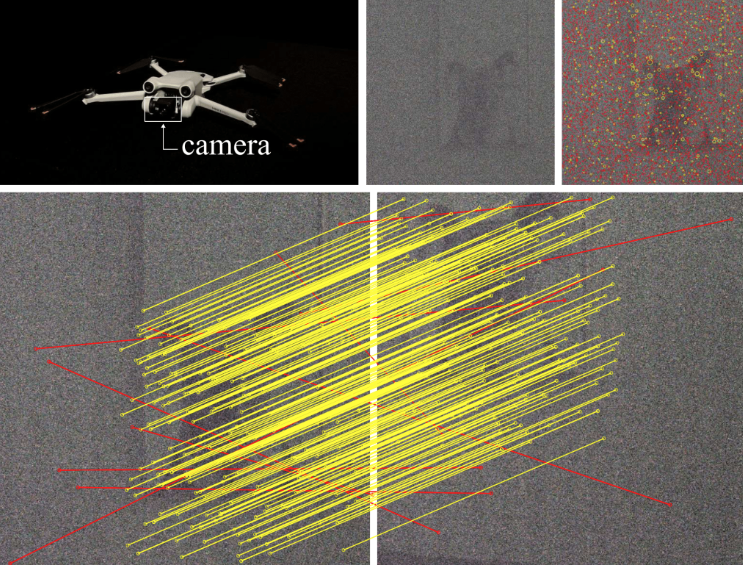

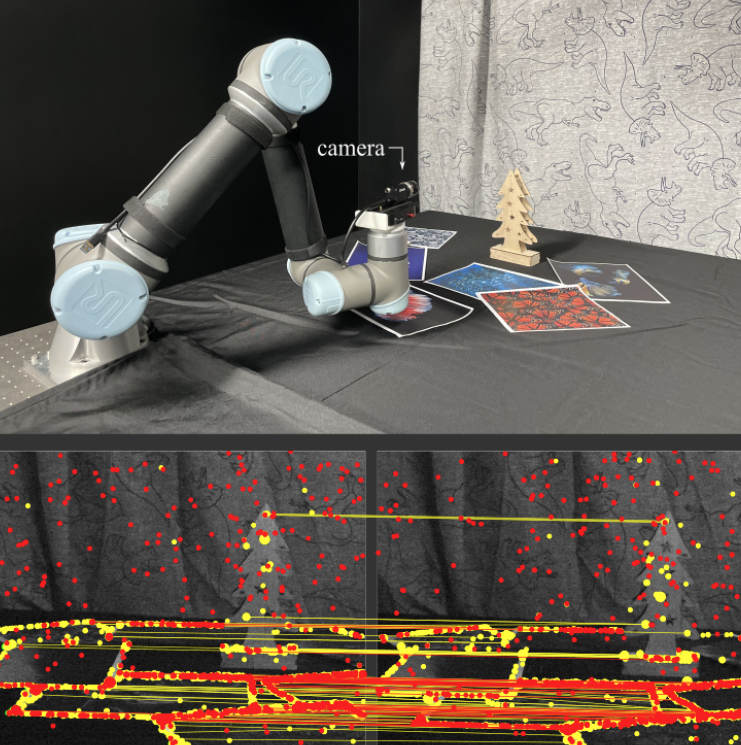

Learning-based Burst Feature Finder for Light-Constrained Reconstruction

Low-light drone imaging suffers from noise that limits reliable feature detection for 3D reconstruction. We present LBurst, a learning-based burst feature finder that jointly detects and describes noise-tolerant features. It does so by exploiting the consistent motion and uncorrelated noise across image bursts. In millilux conditions, our method outperforms single-image and existing burst-based approaches, supporting nighttime drone applications ranging from delivery to wildlife monitoring. For more details: Project Page
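The premise that bursts share consistent scene content but uncorrelated noise can be checked numerically. Averaging N perfectly aligned frames whose noise is independent with standard deviation sigma leaves noise of sigma / sqrt(N). The sketch below simulates this with Python's standard library, assuming ideal alignment and Gaussian noise. It does not reproduce LBurst itself, which learns to detect and describe features from the burst rather than simply averaging it.

```python
import math
import random
import statistics


def merged_noise_std(sigma, n_frames, n_pixels=20000, seed=0):
    """Std of the per-pixel mean of n_frames perfectly aligned frames.

    Each frame sees the same (hypothetical) dim radiance plus independent
    zero-mean Gaussian noise of std sigma, i.e. noise uncorrelated across the burst.
    """
    rng = random.Random(seed)
    true_value = 0.05  # hypothetical scene radiance, arbitrary units
    merged = []
    for _ in range(n_pixels):
        samples = [true_value + rng.gauss(0.0, sigma) for _ in range(n_frames)]
        merged.append(sum(samples) / n_frames)
    return statistics.pstdev(merged)


sigma = 0.1
for n in (1, 4, 16):
    # measured std of the merged pixels vs. the ideal sigma / sqrt(N)
    print(n, round(merged_noise_std(sigma, n), 4), round(sigma / math.sqrt(n), 4))
```

The two printed columns should track each other closely. Real bursts fall short of the ideal sqrt(N) gain when alignment is imperfect or the noise is not independent.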

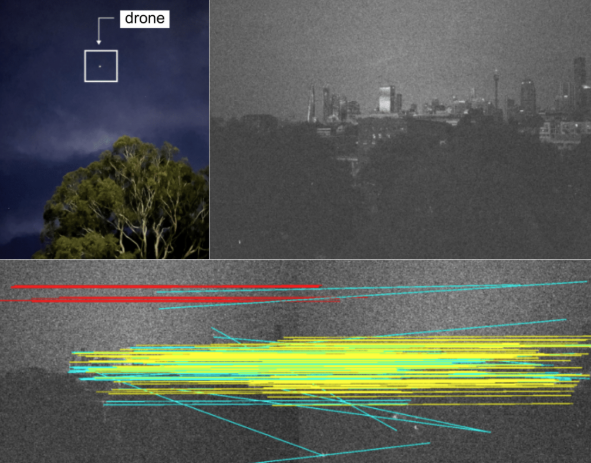

BuFF: Burst Feature Finder for Light-Constrained Reconstruction

Robots operating at night with conventional cameras struggle with reconstruction because their images are noise-limited. In this work, we develop a novel feature detector that operates directly on image bursts to enhance vision-based reconstruction in extremely low-light conditions. Using our feature finder, we demonstrate improved feature performance, camera pose estimates, and structure-from-motion results in challenging light-constrained scenes. For more details: Project Page

Burst Imaging for Light-Constrained Structure from Motion

Images captured in extremely low-light conditions are noise-limited, which can cause existing robotic vision algorithms to fail. We develop an image processing technique that aids 3D reconstruction from images acquired in low light. Our technique is based on burst photography. It uses direct methods to register images within bursts of short-exposure frames, improving the robustness and accuracy of feature-based structure-from-motion (SfM). For more details: Project Page
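Direct registration aligns frames by comparing pixel intensities rather than matched features. The sketch below is a deliberately simple instance of that idea, assuming purely translational motion between burst frames. It searches all integer shifts within a window for the lowest mean squared photometric error, then averages the aligned frames. The source does not specify its motion model or optimizer, and practical direct methods typically use gradient-based, sub-pixel alignment. `make_burst` is a hypothetical synthetic-data helper.

```python
import random


def mean_sq_error(ref, img, dx, dy):
    """Mean squared intensity difference between ref[y][x] and img[y+dy][x+dx] over the overlap."""
    h, w = len(ref), len(ref[0])
    total, count = 0.0, 0
    for y in range(h):
        yy = y + dy
        if not 0 <= yy < h:
            continue
        for x in range(w):
            xx = x + dx
            if 0 <= xx < w:
                diff = ref[y][x] - img[yy][xx]
                total += diff * diff
                count += 1
    return total / count


def align_translation(ref, img, max_shift=3):
    """Direct (intensity-based) registration by exhaustive search over integer shifts."""
    shifts = [(dx, dy) for dy in range(-max_shift, max_shift + 1)
              for dx in range(-max_shift, max_shift + 1)]
    return min(shifts, key=lambda s: mean_sq_error(ref, img, s[0], s[1]))


def merge_burst(frames, max_shift=3):
    """Register every frame to frames[0], then average the aligned samples at each pixel."""
    ref = frames[0]
    h, w = len(ref), len(ref[0])
    acc = [[0.0] * w for _ in range(h)]
    cnt = [[0] * w for _ in range(h)]
    for img in frames:
        dx, dy = align_translation(ref, img, max_shift)
        for y in range(h):
            for x in range(w):
                yy, xx = y + dy, x + dx
                if 0 <= yy < h and 0 <= xx < w:
                    acc[y][x] += img[yy][xx]
                    cnt[y][x] += 1
    # frames[0] aligns to itself at (0, 0), so every pixel has at least one sample
    return [[acc[y][x] / cnt[y][x] for x in range(w)] for y in range(h)]


def make_burst(scene, shifts, sigma, seed=0):
    """Hypothetical synthetic burst: circularly shifted copies of scene plus Gaussian noise."""
    rng = random.Random(seed)
    h, w = len(scene), len(scene[0])
    return [[[scene[(y - dy) % h][(x - dx) % w] + rng.gauss(0.0, sigma)
              for x in range(w)] for y in range(h)]
            for dx, dy in shifts]


def mse_to(a, b):
    """Mean squared difference between two equally sized images."""
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / (len(a) * len(a[0]))


rng = random.Random(1)
scene = [[rng.random() for _ in range(24)] for _ in range(24)]
true_shifts = [(0, 0), (1, 0), (-2, 1), (0, -1), (2, 2), (-1, -2), (3, 0), (1, -3)]
burst = make_burst(scene, true_shifts, sigma=0.1)
merged = merge_burst(burst)
print([align_translation(burst[0], f) for f in burst])
print(round(mse_to(burst[0], scene), 4), round(mse_to(merged, scene), 4))
```

The recovered shifts should match `true_shifts`, and the merged image should sit closer to the clean scene than the noisy reference frame. Feature-based SfM would then run on such merged images.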

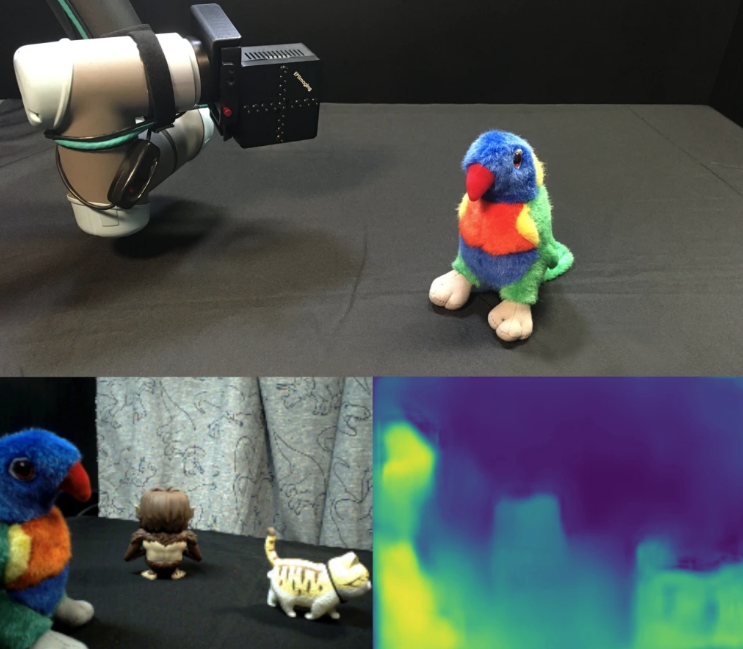

Unsupervised Learning of Depth Estimation and Visual Odometry for Sparse Light Field Cameras

While an exciting diversity of new imaging devices is emerging that could dramatically improve robotic perception, the challenges of calibrating and interpreting these cameras have limited their uptake in the robotics community. In this work we generalise techniques from unsupervised learning to allow a robot to autonomously interpret new kinds of cameras. We consider emerging sparse light field (LF) cameras, which capture a subset of the 4D LF function describing the set of light rays passing through a plane. We introduce a generalised encoding of sparse LFs that allows unsupervised learning of odometry and depth. For more details: Project Page
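A light field is commonly described with a two-plane parameterisation L(s, t, u, v). Here (s, t) is a position on the camera plane and (u, v) is a pixel on the image plane. A sparse LF camera samples only a few (s, t) positions, and depth is encoded in how a point shifts between those views. The sketch below assumes a pinhole model and a hypothetical five-view plus pattern. It computes that shift and recovers depth by least squares. It illustrates the geometry that unsupervised depth learning commonly exploits through view warping, not the generalised encoding introduced in this work.

```python
def view_shift(view_offset, depth, focal_px):
    """Image shift (du, dv), in pixels, of a scene point at `depth` metres
    when the viewpoint moves by view_offset = (ds, dt) metres on the camera plane.

    Pinhole model: u = f * (X - s) / Z, hence du = -f * ds / Z (likewise for v).
    """
    ds, dt = view_offset
    return (-focal_px * ds / depth, -focal_px * dt / depth)


def estimate_depth(view_offsets, shifts, focal_px):
    """Least-squares inverse depth w = 1/Z from observed shifts, using du = -f*ds*w, dv = -f*dt*w."""
    num = 0.0  # sum of a_i * b_i, with a_i = -f * offset and b_i = observed shift
    den = 0.0  # sum of a_i ** 2
    for (ds, dt), (du, dv) in zip(view_offsets, shifts):
        num += -focal_px * (ds * du + dt * dv)
        den += focal_px ** 2 * (ds * ds + dt * dt)
    return den / num  # Z = 1 / w = den / num


# Hypothetical sparse LF: five sub-apertures in a plus pattern with a 1 cm baseline.
baseline = 0.01
offsets = [(0.0, 0.0), (baseline, 0.0), (-baseline, 0.0), (0.0, baseline), (0.0, -baseline)]
focal = 500.0
observed = [view_shift(o, depth=2.0, focal_px=focal) for o in offsets]
print(observed)
print(estimate_depth(offsets, observed, focal))
```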

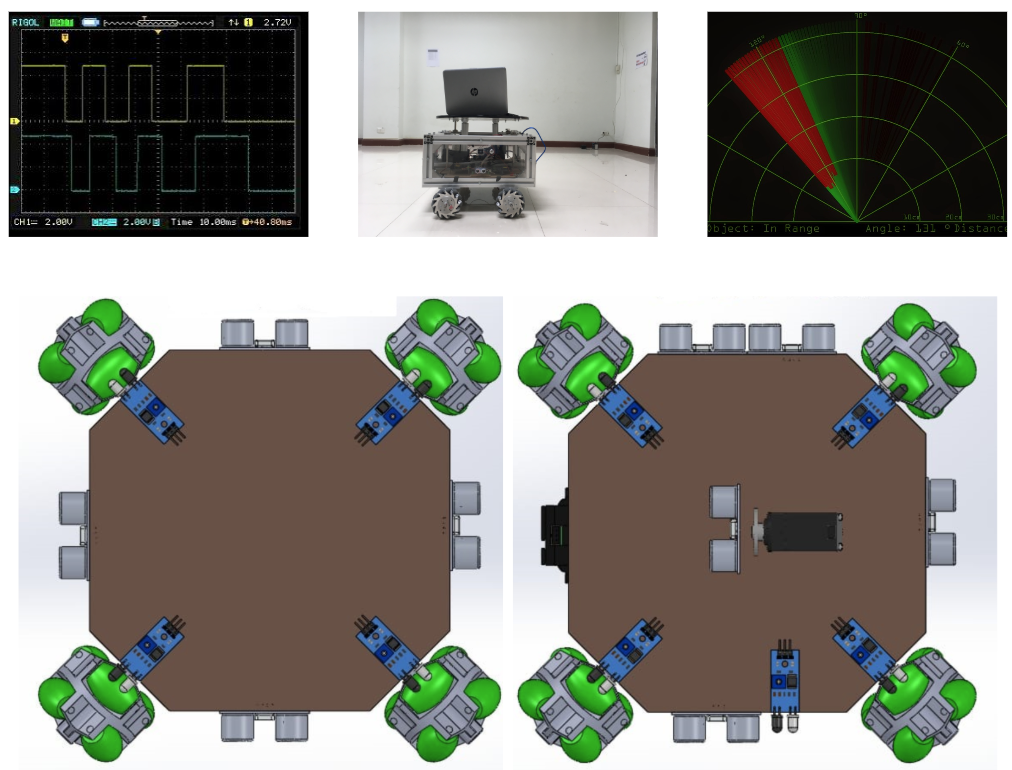

Design and Development of an Industrial Mobile Robot for Educational Studies

Thailand 4.0, an economic model adapted from Industry 4.0, aims to help the country overcome several economic challenges through knowledge, technology, and innovation. In this research, we design and develop an industrial-standard, omnidirectional, obstacle-avoiding warehouse mobile robot. We enable autonomous navigation by combining simultaneous localization and mapping (SLAM) with a real-time obstacle recognition system based on machine learning techniques.

We analyze the reliability, complexity, and sensor fusion technologies of low-cost sensors. We then replicate as many of the industrial robot's functionalities as possible on a small-scale robot for educational studies. AutoCAD, SolidWorks, Arduino, Raspberry Pi, ROS, HTML, Python, MATLAB, TensorFlow, and OpenCV were used at different stages of research and development.

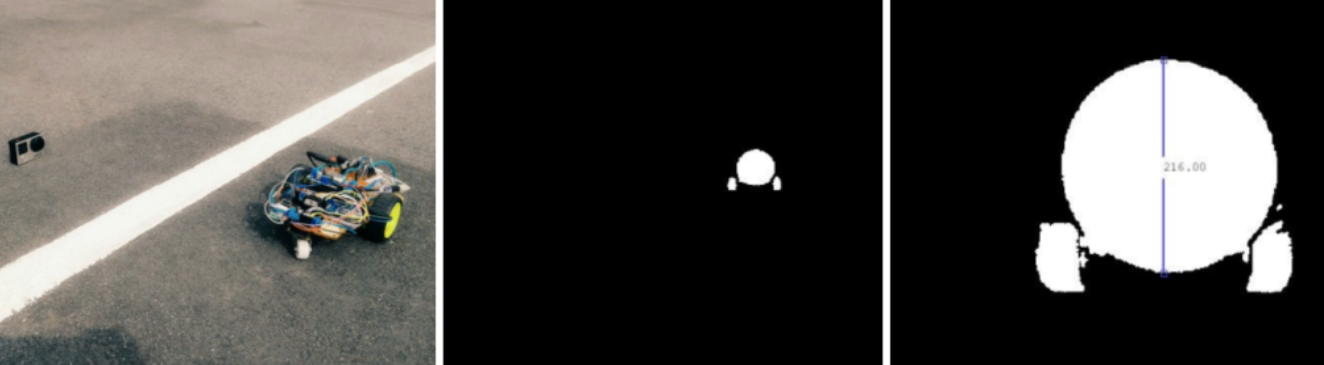

Construction of a Robot Navigation Simulator using Imaging Techniques

Localization is a fundamental challenge in autonomous navigation, obstacle avoidance, and motion tracking, especially on slopes. Recent advances in visual odometry allow robots to use sequential camera images to estimate their position relative to a known position. In this research, we develop a statistical model that estimates the velocity of a robot on unknown terrain using visual odometry.

We design and develop a mobile robot as our controlled target and use a GoPro camera to capture visual cues from the target. We operate the robot outdoors and measure its position with the aid of multiple computer vision techniques. We estimate velocity from both the encoders and the visual sensors. We then perform experimental analysis to derive the statistical relationship between the robot's slip, friction, and velocity.

Our navigation simulator, built on this statistical model, performs similarly to the physical robot.
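The source does not give the form of the statistical model. The sketch below assumes the simplest version, in three steps:

- Slip is computed from paired encoder and visual-odometry speeds as s = (v_encoder - v_visual) / v_encoder.
- Slip is fitted as a linear function of encoder speed by ordinary least squares.
- A simulator uses the fitted model to predict ground speed.

The measurements are made up for illustration, the friction term from the study is not modelled, and all function names are hypothetical.

```python
def slip_ratio(v_encoder, v_visual):
    """Longitudinal slip: fraction of the encoder-measured speed lost to wheel slip."""
    return (v_encoder - v_visual) / v_encoder


def fit_line(xs, ys):
    """Ordinary least-squares fit y = a + b * x."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    return a, b


def simulate_velocity(v_encoder, a, b):
    """Hypothetical simulator step: predicted ground speed after applying the fitted slip model."""
    return v_encoder * (1.0 - (a + b * v_encoder))


# Hypothetical paired measurements (m/s), illustrative only, not data from this study.
v_encoder = [0.2, 0.4, 0.6, 0.8, 1.0]
v_visual = [0.19, 0.37, 0.54, 0.70, 0.85]
slips = [slip_ratio(e, v) for e, v in zip(v_encoder, v_visual)]
a, b = fit_line(v_encoder, slips)
print(round(a, 3), round(b, 3))  # → 0.025 0.125
print(round(simulate_velocity(0.5, a, b), 3))
```

A fuller model would add regressors such as slope angle or terrain friction.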

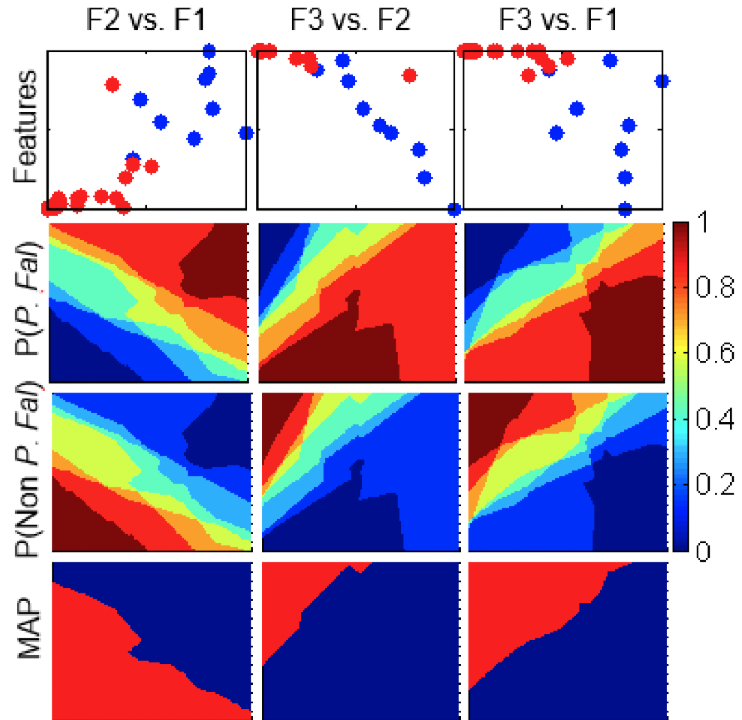

Automatic Detection of Malarial Parasites in Human Blood Smear

Malaria is a globally widespread mosquito-borne disease caused by Plasmodium parasites. Blood films are stained to improve visualisation under the microscope. Even so, automatic classification remains challenging because the film contains parasites of many kinds in various orientations, along with other artefacts. In this research, we detect the most critical parasite, the gametocyte stage of Plasmodium falciparum, in Giemsa-stained blood films by analysing photomicrographs. A series of pre-processing operations first extracts the parasite from the image background. We then classify it with both K-nearest neighbors (K-NN) and Gaussian naïve Bayes classifiers. The key element of the research is the use of moment invariants, which make the input features invariant to translation, rotation, and scale (TRS). Because this application calls for a high true positive rate, we select Gaussian naïve Bayes as the more suitable classifier based on leave-one-out cross-validation.
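The sketch below computes the first two of Hu's seven moment invariants from a binary mask:

- phi1 = eta20 + eta02
- phi2 = (eta20 - eta02)^2 + 4 * eta11^2

Both use the scale-normalised central moments eta_pq = mu_pq / mu00^(1 + (p+q)/2). The code checks that the invariants are unchanged by translation and by a 90-degree rotation. The L-shaped mask is a hypothetical stand-in for a segmented parasite; the study's exact feature set is not stated.

```python
def central_moment(mask, p, q):
    """Central moment mu_pq of a binary mask (list of rows, 1 = foreground pixel)."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(pts)
    xc = sum(x for x, _ in pts) / n
    yc = sum(y for _, y in pts) / n
    return sum((x - xc) ** p * (y - yc) ** q for x, y in pts)


def hu_phi1_phi2(mask):
    """First two Hu invariants from scale-normalised central moments eta_pq."""
    mu00 = central_moment(mask, 0, 0)  # equals the foreground pixel count

    def eta(p, q):
        return central_moment(mask, p, q) / mu00 ** (1 + (p + q) / 2)

    e20, e02, e11 = eta(2, 0), eta(0, 2), eta(1, 1)
    phi1 = e20 + e02
    phi2 = (e20 - e02) ** 2 + 4 * e11 ** 2
    return phi1, phi2


def rotate90(mask):
    """Rotate a mask by 90 degrees clockwise."""
    return [list(row) for row in zip(*mask[::-1])]


def translate(mask, dx, dy):
    """Place the mask at offset (dx, dy) inside a larger zero-padded canvas."""
    w = len(mask[0]) + dx
    return [[0] * w for _ in range(dy)] + [[0] * dx + list(row) for row in mask]


# Hypothetical L-shaped blob standing in for a segmented parasite.
blob = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]
print(hu_phi1_phi2(blob))
print(hu_phi1_phi2(rotate90(blob)))
print(hu_phi1_phi2(translate(blob, 3, 2)))
```

In a pipeline like the one described, such invariants would form the feature vector passed to K-NN or Gaussian naïve Bayes; OpenCV's `cv2.HuMoments` computes all seven. On a pixel grid, scale invariance holds only approximately, because resampling changes sub-pixel structure.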